A gunshot is fired. Depending on where in the city it is, the sound might not just be picked up by human ears. By early next year, almost 130 square miles of Chicago will be monitored for gunshots by mechanical ears as well, via a technology called ShotSpotter.

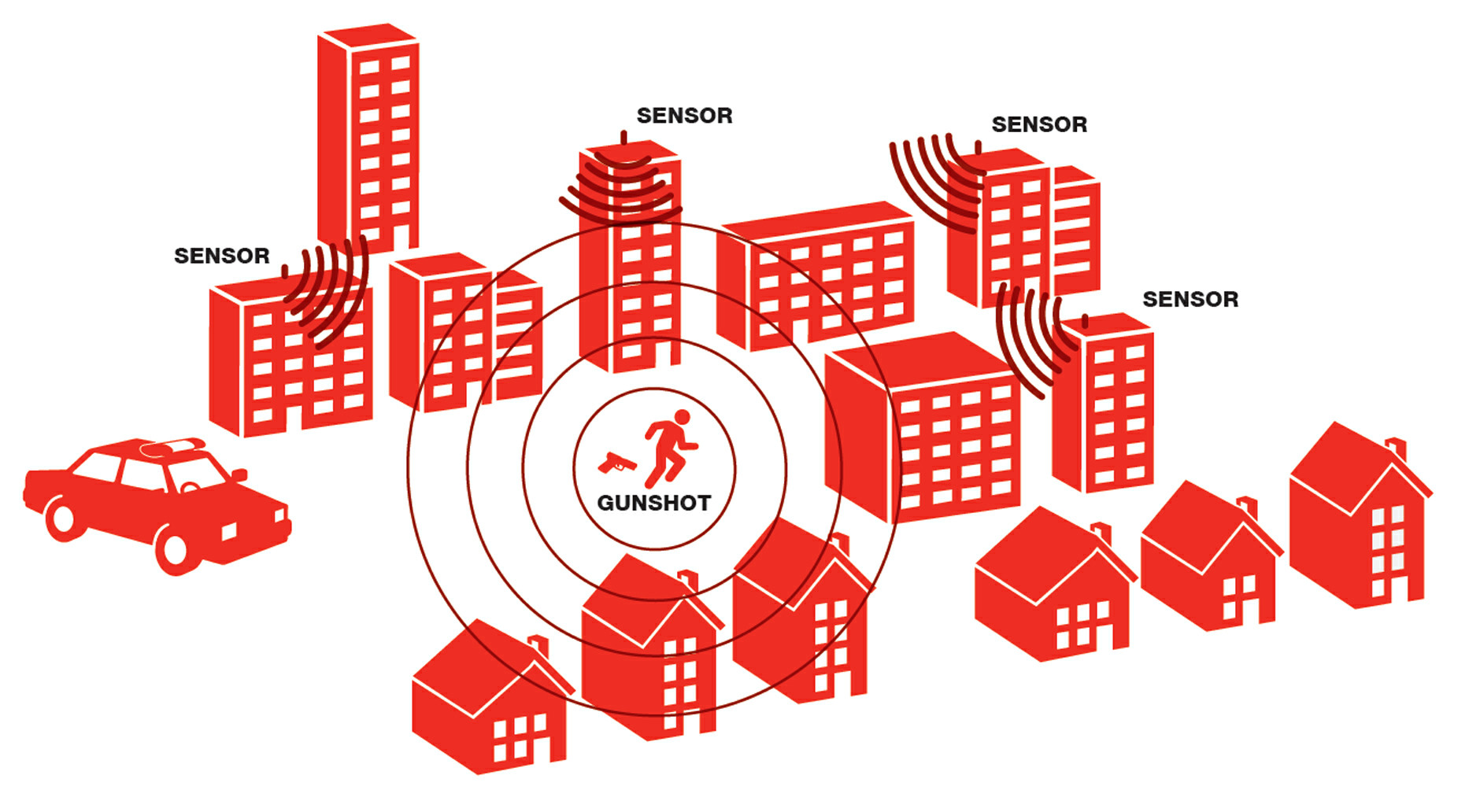

ShotSpotter sensors—which have already been installed on rooftops and telephone poles in six districts and will be installed in another six by mid-2018—register the boom. Three sensors triangulate the location of the sound’s origin, within a radius which SST Inc., the technology’s manufacturer, purports to be no more than twenty-five meters from the actual shot. A recording is then sent to SST’s California headquarters, where it’s determined whether the sound was actually a gunshot.

If confirmed, metadata—including information like location and time of the shots, rounds fired, and the direction that the shooter was traveling in—is pinged back to the smartphones of Chicago Police Department (CPD) officers in the appropriate district, who are then deployed to investigate the shooting. Ralph Clark, CEO of SST Inc., has claimed that this whole process takes forty-five seconds and supplies police with accurate information over eighty percent of the time.

ShotSpotter is one of several high-priced predictive and surveillance technologies that the CPD is rolling out this year, packaged in the form of Strategic Decision Support Centers (SDSC). SDSCs are intelligence hubs located in a police district, accessing not only its ShotSpotter network, but also its Police Observation Devices (PODs)—a system of cameras installed in 2003 that are now being integrated with ShotSpotter technology—and armed with a predictive policing software called HunchLab. The first SDSC was implemented in Englewood (7th police district) in January. In 2016, Englewood had some of the highest incidences of gun violence, making it a tier one district—tiers denote levels of violence, with one signaling the highest. Since then, the technologies have been launched in five more tier one districts like Englewood, and installation has begun in six tier two districts.

The CPD has been quick to tout the success of the SDSCs: that homicide rates are down in all six of the already technologized tier one districts from what they were in 2016. But 2016 was an exceptional year, so much so that, excluding 2016 from consideration, 2017 is shaping up to be the most violent year since 2002. It’s important, then, to explore the efficacy of these technologies, especially given their price tag—an estimated $1.5 million per district—and the controversy that they, ShotSpotter in particular, have drawn in other cities. That controversy has not been limited to questions of the technologies’ effectiveness; it extends to the legal and ethical implications of their use.

Chicago itself has had unsatisfactory results with SST Inc. before: the city initiated a pilot program with the company in 2007 that was promptly discontinued. According to a 2010 NBC Chicago report, the CPD cited the technology’s ineffectiveness as its reason for abandoning it; the company, meanwhile, claimed that the city of Chicago still owed them $200,000. Another pilot program was initiated in 2012, with sensors installed in “a total of three square miles” in Englewood and Harrison districts, said Jonathan Lewin, Chief of the CPD’s Bureau of Technical Services. (He did not mention the 2007 pilot program, although he’d been asked when Chicago’s relationship with SST Inc. began.) Neither the allegations of ShotSpotter’s ineffectiveness, nor the legal and ethical concerns prompted by ShotSpotter software’s use in other cities, seem to have slowed Chicago’s zealous roll-out process. “This is the fastest pace of implementation…that [SST Inc. has] ever conducted,” Lewin said—a claim that SST Inc.’s Clark did not confirm by press time.

“[ShotSpotter is] really designed to tackle districts with violence. It’s built around gun violence,” said chief communications officer Anthony Guglielmi. Although the SDSCs are intended to be a more general crime-prevention strategy, they were also conceived because of 2016’s almost unprecedented gun crime. “There was an unacceptable level of gun violence in 2016, and I think the department and the mayor recognized that something had to be done,” Lewin added. “I think the SDSCs are our signature crime fighting strategy.”

The CPD has been quick to embrace the program. On January 7, the SDSC in Englewood was launched. ShotSpotter and POD programs were expanded, and cell phones with access to real-time crime data were issued to cops in that district. Between January and July CPD launched SDSCs in Harrison (11th), Gresham (6th), Deering (9th), Ogden (10th), and Austin (15th)—all tier one districts. By June, CPD and Mayor Emanuel’s office had already begun to attribute declining violent crime rates in Englewood and Harrison to the SDSCs.

In September, CPD announced plans to expand the program into six tier two districts—Wentworth (2nd), Grand Crossing (3rd), South Chicago (4th), Calumet (5th), Chicago Lawn (8th), and Grand Central (25th). Each expansion costs around $1.5 million, making the total cost of the project $18 million, which Guglielmi said was raised through leveraging both city and private funding. The nerve centers should be online in twelve of Chicago’s twenty-two districts by the middle of 2018, Guglielmi added. Once installation is complete, almost 130 square miles of the city will be within earshot and eyeshot of the Chicago Police.


What is $18 million buying the city? Guglielmi broke down the SDSCs’ “three pronged” approach.

First, there’s “the gunshot detection, that’s ShotSpotter.” ShotSpotter technology is actually licensed out and operated by SST Inc. for an annual fee, a service which they now provide for over ninety agencies around the country. The service typically costs about $65,000 to $90,000 per square mile, per year, not including the fees the company charges to install its sensors.

Some, including a 2016 Forbes magazine article, have called the company’s 2011 transformation—from what Clark called “a premise-based high price system” into a subscription service—a political and economic maneuver more than one meant to improve its product. The change allows the company to retain ownership of their data and possibly to sell it later. According to Lewin, the arrangement is in the best interest of the city too, because the city “doesn’t have to worry about maintenance” while ensuring “contractual service levels that [SST Inc.] has to meet.”

“The second thing that’s happening is the ShotSpotters are integrated with our crime cameras,” Guglielmi said. PODs were originally installed in 2003 in some of the city’s most high-crime areas. They are now being integrated with ShotSpotter using a software called Genetec. Once a sensor pinpoints a gunshot, cameras can be directed to swivel towards the location of the source. Every POD in a district can be accessed from that district’s SDSC.

“The last piece of it is HunchLab,” said Guglielmi. It’s the CPD’s new predictive policing software that, like ShotSpotter, is licensed to the police department by its developer Azavea. HunchLab uses environmental risk factors—weather, season, proximity to institutions like liquor stores or banks, and other predictor variables—combined with historical crime data and what it calls “socioeconomic indicators” to predict crime in an area. HunchLab’s use of environmental risk factors draws on a method called Risk Terrain Modeling (RTM). Recent studies testing other RTM software had statistical success in predicting crime. Azavea itself has not yet released any formal studies on the effectiveness of its approach.

The CPD brought in the University of Chicago’s Crime Lab as a “data partner” to perform quality-assessment checks on its own. According to Guglielmi, the CPD was able to do so with funding it received from the Department of Justice. Research managers at the Crime Lab said that they were working with CPD to implement SDSCs and facilitate the smooth rollout of SDSCs in tier two districts. Although the Crime Lab has partnered with the CPD before, Kim Smith, a research manager at the Crime Lab, said, “This is certainly [the Crime Lab’s] biggest technical assistance to date.”

Guglielmi maintains that “[the Crime Lab] is independent” from the department, despite the fact that Crime Lab analysts are now working side by side with police officers in SDSCs to provide them with a real-time statistical hand. The CPD’s contracting of Crime Lab to crunch numbers is nothing new, however, nor is their confidential data sharing agreement, which bars the lab from sharing any of the data that underlies its findings. It makes their research into a sort of black box: Smith declined to say whether the SDSCs—or individual elements of them, like ShotSpotter—had a statistically significant role in the decline of shootings from 2016 to 2017, saying that they weren’t “sure that we’re ready to make that public yet.”

Lewin, however, was more open about their findings, saying that they now had enough information from Englewood—a good “barometer,” in his words—to ascertain that its SDSC is doing something positive for the district.

“It’s been running long enough, so there’s enough data collected in the performance period now to say with some validity that we’re pretty sure the SDSC process itself has contributed to the crime reduction [in Englewood],” he said.

Lewin explained the Crime Lab’s methods: they created a “synthetic control” model, a projected version of Englewood patched together from other districts in Chicago without the SDSC technology, but similar to parts of Englewood in other respects. Statisticians were thus able to project the hypothetical crime rate for the neighborhood had an SDSC never been installed. By November, crime in that projected Englewood had diverged enough from the real one to make the statistically significant statement that, indeed, the SDSC process was an important contributor to the declining crime rate in the neighborhood.
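The idea behind a synthetic control can be illustrated with a small sketch. This is not the Crime Lab's model, which has not been made public; the monthly counts, the three donor districts, and the function names below are all made up for illustration. The sketch picks nonnegative weights that sum to one so that a blend of untreated districts tracks the treated district before the launch, then uses the same blend as the projected "no SDSC" baseline afterward. It does not attempt the significance testing Lewin describes.

```python
# Illustrative synthetic-control sketch. All numbers are invented.

def combine(weights, donors):
    """Weighted blend of donor series, month by month."""
    months = len(donors[0])
    return [sum(w * d[m] for w, d in zip(weights, donors)) for m in range(months)]


def fit_weights(treated_pre, donors_pre, steps=20):
    """Grid-search weights (nonnegative, summing to 1) for exactly three donors."""
    best, best_err = None, float("inf")
    for i in range(steps + 1):
        for j in range(steps + 1 - i):
            w = (i / steps, j / steps, (steps - i - j) / steps)
            synth = combine(w, donors_pre)
            err = sum((t - s) ** 2 for t, s in zip(treated_pre, synth))
            if err < best_err:
                best, best_err = w, err
    return best


# Hypothetical monthly shooting counts before the launch.
treated_pre = [30, 28, 33, 31]
donors_pre = [
    [20, 18, 22, 21],  # hypothetical district A
    [40, 38, 44, 41],  # hypothetical district B
    [25, 30, 20, 28],  # hypothetical district C
]

# Hypothetical counts after the launch.
treated_post = [24, 22]
donors_post = [[21, 19], [39, 41], [26, 27]]

weights = fit_weights(treated_pre, donors_pre)
synthetic_post = combine(weights, donors_post)

# Negative gap: fewer shootings than the projected baseline.
gap = [actual - projected for actual, projected in zip(treated_post, synthetic_post)]
```

In this toy data the treated district is exactly the average of districts A and B before the launch, so the search assigns them equal weight and gives district C none. A real analysis would use many more donor districts, a proper optimizer, and a test of whether the post-launch gap is larger than chance would produce.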

Lewin listed off numbers from other districts, although none yet have passed similar tests of statistical significance. “Seven and eleven, which were the first two to go live together, are down thirty-two percent in shootings as of the end of October. All the SDSC districts are down twenty-four percent. If you take the six SDSC districts out of the city, the city is down twelve percent.” He put it another way: “The districts that used to drive up the violence, which are the tier ones, are now driving it down.”

Residents in Englewood have noticed a decline as well. Chauncey Harrison, Public Safety Liaison for the nonprofit Teamwork Englewood, said that “[the SDSCs have] been a good help, and I think it has been in many ways a positive step in the right direction, from a public safety standpoint in the community.”

However, Harrison said that he doesn’t think “the ShotSpotter technology is…necessarily leading the charge [in terms of] reducing nonviolent offenses,” although he’s noticed a decline in those too. Indeed, that has been a “research challenge,” as Lewin put it, for the CPD and the Crime Lab: figuring out what elements of the SDSC system are doing what. “It’s hard for us to use our typical evaluation methods to determine which components are contributing to the reduction, if they are,” Smith said. “Because everything was kind of rolled out at once, and ShotSpotter was not even rolled out at the same time across districts, it’s hard for us to isolate the impacts.”

It’s an important concern to address, given the questions of efficacy that have surrounded ShotSpotter’s implementation in other cities, and the cost the police department will be paying each year for the technology—at least $6.5 million per year in subscription fees for ShotSpotter alone, if it isn’t expanded into more districts.

It’s hard to say how effective ShotSpotter is in Chicago based on results in other cities and studies, mainly because many of those results have contradicted each other. One 2006 study conducted by the National Institute of Justice found that the technology could detect up to 99.6 percent of gunshots, while detecting 90.9 percent of them within forty feet of where they were actually fired. SST Inc. itself actually advertises a less precise locational accuracy, purporting to detect gunshots eighty percent of the time, and pinpoint them within twenty-five meters (a little over eighty feet) of where they were actually fired.

What has been found, however, is that these numbers vary greatly from city to city, in large part depending on local factors like topography, the presence of tall buildings, and even temperature and humidity. Earlier this year, it was found in a court case in San Francisco—a notoriously hilly city, and one of SST Inc.’s customers—that the sensors were not, in fact, as accurate as the company claimed. ShotSpotter evidence was used to pinpoint the location of a gun crime; later evidence showed that ShotSpotter’s triangulation of the event was a block off from where the crime was actually committed. Clark, SST Inc.’s CEO, subsequently was quoted in a San Francisco Examiner article admitting, “the eighty percent is basically our subscription warranty, as you will. That doesn’t really indicate what someone will experience,” but boasted that it is usually better.

Another concern that often crops up pertains to ShotSpotter’s effect on the clearance rate—whether or not an alert actually translates into an arrest. It’s a qualm that the Forbes article explores in detail using data it obtained from agencies in seven cities around the country that use ShotSpotter. Prior to the article’s publication, SST Inc. implored its customers not to release any information to Forbes.

Some cities’ experience with the technology was more promising than others: In Milwaukee, 10,285 ShotSpotter alerts translated into 172 arrests; in San Francisco, however, 4,385 ShotSpotter alerts translated into only two arrests. As of yet, it’s unclear how ShotSpotter has affected Chicago’s clearance rate. “That’s a specific measure that we haven’t done yet,” Lewin said.

Last month, the Weekly requested data from the CPD and the city’s Office of Emergency Management and Communication (OEMC) for all ShotSpotter events and related information. The CPD, as of press time, is three weeks overdue on its response. OEMC, however, provided data from February—when it says it began logging ShotSpotter alerts—to November 18. OEMC also provided contemporaneous data for all citizen and police reports of gunshots, and whether the alert resulted in a case being opened by CPD detectives.

The vast majority of alerts sent from SST Inc.’s headquarters to CPD resulted in dead ends: no evidence found, and no reason to begin a case, let alone make an arrest. Of 4,814 unique ShotSpotter-linked events identified by the Weekly in OEMC’s data, just 508—a little over ten percent—resulted in the CPD finding enough evidence to open an investigation. This is roughly comparable to the rate of cases opened from solely human-reported gunshots across the entire city for the same time period—nearly fourteen percent—bringing into question how much more effective ShotSpotter truly is.

SST Inc. often claims that most gunshots go unreported in urban environments, but in Chicago, that is not borne out by our data. Of the 508 ShotSpotter alerts that led to opened cases, 435—eighty-five percent—were also reported within five minutes by civilian calls to 911, police reports, or other on-the-ground witnesses. On the other hand, a full sixty percent of miscellaneous or unlabeled ShotSpotter-linked events were attributed to ShotSpotter alone. However, ShotSpotter was slightly faster (by about 2.2 seconds) than its human competition, which can be the difference between life and death with a traumatic injury like a gunshot.

By the Numbers: How the Weekly wrangled raw data into insights on ShotSpotter’s effect on crime reporting across the city

In November 2017, South Side Weekly requested data for all incidents related to ShotSpotter detection from the Office of Emergency Management and Communication (OEMC). The Weekly received a log of all ShotSpotter alerts, 911 calls, and police reports related to gunshots from February 2017 to November 18th, 2017 – in total, nearly 65,000 records from across the city. In order to reach the most accurate representation of ShotSpotter’s effectiveness, the Weekly undertook significant cleaning and analysis to distill the records into discrete gunshot incidents.

When a gunshot goes off, several reporting avenues are spurred to action – concerned citizens who call 911, ShotSpotter detection, and police officers who witness the act. The Weekly combined records that occurred within a quarter of a mile and within five minutes of each other. ShotSpotter claims to locate gunshots to within 25 meters, or about 0.02 miles; the Weekly chose a larger range to account for citizens hearing gunshots from a farther distance and the potential for a scattered range of ShotSpotter sensors. From these “clusters” of records we were able to identify 4,814 gunshot incidents. The clustering method also allowed us to see which events were detected solely by ShotSpotter sensors, and which would have been reported to the police even if the technology were not installed.
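The clustering step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Weekly's actual analysis code: the `Record` fields, the source labels, and the choice to chain records together (so that a record joins a cluster if it is close enough to any member) are all hypothetical, and the sample coordinates are invented.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from math import asin, cos, radians, sin, sqrt

MAX_MILES = 0.25                 # quarter-mile radius described above
MAX_GAP = timedelta(minutes=5)   # five-minute window described above
EARTH_RADIUS_MILES = 3958.8


@dataclass
class Record:
    source: str      # hypothetical labels: "shotspotter", "911", "police"
    time: datetime
    lat: float
    lon: float


def miles_between(a, b):
    """Great-circle (haversine) distance between two records, in miles."""
    dlat = radians(b.lat - a.lat)
    dlon = radians(b.lon - a.lon)
    h = sin(dlat / 2) ** 2 + cos(radians(a.lat)) * cos(radians(b.lat)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * asin(sqrt(h))


def cluster(records):
    """Group records that are linked by being close in both space and time."""
    parent = list(range(len(records)))

    def find(i):
        # Follow parent links to the cluster root, shortening the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(records)):
        for j in range(i + 1, len(records)):
            a, b = records[i], records[j]
            if abs(a.time - b.time) <= MAX_GAP and miles_between(a, b) <= MAX_MILES:
                parent[find(i)] = find(j)

    groups = {}
    for i, rec in enumerate(records):
        groups.setdefault(find(i), []).append(rec)
    return list(groups.values())


def shotspotter_only(group):
    """True when no 911 call or police report is in the cluster."""
    return all(r.source == "shotspotter" for r in group)


# Invented sample records.
records = [
    Record("shotspotter", datetime(2017, 3, 1, 22, 0), 41.7800, -87.6450),
    Record("911", datetime(2017, 3, 1, 22, 2), 41.7810, -87.6440),
    Record("shotspotter", datetime(2017, 3, 1, 23, 30), 41.8800, -87.7200),
]
clusters = cluster(records)
solo_count = sum(shotspotter_only(g) for g in clusters)
```

Here the first two records fall within a quarter mile and two minutes of each other and merge into one incident, while the third stands alone as a ShotSpotter-only event. The pairwise comparison is simple but slow; for tens of thousands of records, sorting by time and comparing only records inside the five-minute window would be a practical refinement.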

64,880: total number of records received from OEMC

6,712: number of ShotSpotter activations usable for our analysis

4,814 discrete gunshot events

4,158 resulted in an empty or “miscellaneous” disposition code

2,924 of those were solely triggered by a ShotSpotter alert

508 ShotSpotter alerts were then pursued as CPD cases

73 of those were only detected via ShotSpotter, with no other police or civilian reporting
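The shares quoted in the article follow directly from these counts. The short calculation below uses only the figures listed above; the variable names are chosen here for illustration.

```python
# Counts taken from the list above.
events = 4814                 # discrete gunshot events
opened_cases = 508            # events pursued as CPD cases
also_reported = 435           # opened cases also reported by people
shotspotter_only_cases = 73   # opened cases detected by ShotSpotter alone


def pct(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


case_rate = pct(opened_cases, events)                # share of events that became cases
corroborated = pct(also_reported, opened_cases)      # share of cases with a human report
solo = pct(shotspotter_only_cases, opened_cases)     # share of cases from ShotSpotter alone
```

This gives a case rate of 10.6 percent, with 85.6 percent of opened cases also reported by people and 14.4 percent detected by ShotSpotter alone. The last two counts add up to the 508 opened cases.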

Most common crimes detected by ShotSpotter:

1. Shooting victim with a handgun

2. Unlawful discharge of a handgun

3. Criminal damage to vehicle

4. Assault armed with a handgun

Data analysis by Jasmine Mithani, Pat Sier, and Sam Stecklow

Then there are the legal and ethical questions that the use of ShotSpotter has engendered in other cities. The ACLU, for example, has voiced concerns about the potential threat to an individual’s Fourth Amendment rights such recording devices could pose, particularly if those devices have the capacity to record voices.

Historical examples demonstrate that the technology does have that capacity: in 2011, sensors installed in New Bedford, Massachusetts captured snippets of conversation on either side of the actual gunshot (the ShotSpotter server, called LocServer, downloads sound from two seconds prior to and four seconds after the actual boom). The sound bite was subsequently used in a court case to convict one of the suspects—a strategy that the defense counsel alleged was illegal under both Massachusetts’s wiretapping laws and their client’s constitutional Fourth Amendment rights. In 2010, a similar scenario played out in an Oakland courtroom.

Clark dismissed these as “edge cases,” anomalous incidents that are nothing to worry about, saying that the technology is designed to pick up only “things that go bang.” But given the particular stringency of Illinois’s Eavesdropping Statute, reoccurrence of these “edge cases” might be just what the city needs to worry about. (The Weekly found no cases of this occurring yet in Chicago.)

Jay Stanley, senior policy analyst at the ACLU, sat down with CEO Clark to probe more into ShotSpotter’s capacity as an eavesdropping device. His takeaway from the discussion, which was mostly positive, was posted on the ACLU’s website. He reported the following: that the sensors are constantly recording audio; that audio is retained for “hours or days not weeks” after the event, according to Clark; and that any sound bite confirmed to correspond to a gunshot—again, which contains two seconds prior to the event and four seconds after—is sent to the customer agency, along with the alert.

While Clark “was very open and forthcoming,” Stanley still expressed concerns about the length of time that audio is stored, the proximity of recording devices to homes, and that the company had not released its source code. To the last point, Clark offered to share with the ACLU a confidential (NDA-protected) version of the company’s code, although the ACLU did not end up undertaking the review. Ultimately, however, Stanley writes that he’s not “losing sleep over this technology at this time,” mostly because Clark seemed to realize “that his company sits on the edge of controversy,” and it is thus “his incentive not to allow it to be turned to broader ends”—that is, to the ends of state surveillance.

Predictive policing, too, has its fair share of issues. As University of Pennsylvania doctoral candidate Aaron Shapiro notes in an article for the journal Nature, “confounding factors make it impossible to measure directly its effectiveness at reducing crime.” Effectiveness aside, he brings up the concern that “[e]quating locations with criminality amplifies problematic policing patterns.” In other words, “geographical” and “socioeconomic” factors incorporated into HunchLab’s RTM model could be nothing more than proxies for other biases in the data which have the potential to encourage the practice of racial policing.

The ACLU of Illinois has leveled similar critiques—that it encourages racial profiling by police—against the CPD’s Strategic Subject List (SSL), another predictive strategy it began in 2013. SSL is a list of individuals generated by an algorithm that guesses which ones are likely to be involved in violent crime. Disregarding the ethical quandaries posed by such a list—which the Weekly has explored in the past—it’s also unclear if the list is even statistically meaningful: a study conducted by the RAND Corporation, a think tank, found that individuals on the SSL were no more likely to be involved in violent crimes than individuals not on the list. (CPD disputed these claims, and RAND is working on a second study of the program.)

Harrison isn’t too concerned with the possibility that CPD has overstepped their legal and ethical bounds by installing an SDSC and expanding ShotSpotter in Englewood. “I think the rules are being followed,” he said. “I think the laws are being upheld as well,” though he added that “there has to be a balance, because you don’t want to have so much technology that people feel that their privacy is being violated.”

But he was adamant that technological policing is by no means a comprehensive violence-prevention strategy: “You can’t just attribute the reduction in shootings to the ShotSpotter technology and the other technological upgrades within the Englewood community.” Speaking supportively of the CPD’s 2017 attempts to revive its flagging CAPS program, Harrison said, “CAPS is doing exceptionally well in the 7th district.”

But policing in general is no panacea, either. “The thing is, you can’t attribute that success singlehandedly to police,” Harrison said. “When you’re trying to address violence in the community, police have a role to play, parents have a role to play, prevention has a role to play, and partnerships have a role to play.”

This multifaceted approach to violence in communities is important, especially because the jury is still out on the CPD’s new technologies. The most optimistic of results, with regard to the SDSC process, are the Crime Lab’s findings in Englewood, according to Lewin: that the reduction of violence can in fact be attributed to the new technologies being deployed there, even if it’s unclear which components—ShotSpotter or otherwise—are actually making the difference. “That’s what the University of Chicago, and we together are really focused on trying to measure,” Lewin said.

Also worth remembering is that it will take time to understand the long-term consequences of these changes, a caveat that even Clark admits with regard to his own technology: “You’ve got to be deployed two years” at least to see and understand its effectiveness in a given city, Clark told DNAinfo. And while its first year looks promising when compared to 2016, 2017 still looks like the next most violent year for Chicago since 2002—even if it’s the tier one districts bringing down this year’s violence. The true test, then, is still to come: to see whether or not violence will continue to decline in 2018 and beyond, to a level that isn’t just deemed satisfactory because it’s lower than an exceptionally violent year that came the year before.

Data editor Jasmine Mithani, webmaster Pat Sier, and contributing editor Sam Stecklow contributed reporting to this article.

Correction, August 31, 2018: An earlier version of this article stated that the average time difference between a ShotSpotter notification of a gunshot and a 911 call of the same gunshot was about thirty-two seconds. It is 2.2 seconds.
